S3 Upload: the simplest possible way in Java

I was recently at QConSP 2013, where I met many friends and had lots of conversations. While I was talking to Milfont, he told me he thought nobody did regular file uploads anymore, since S3 is so convenient and easy to use. In his Ruby projects he uses Paperclip, which supports S3 uploads with simple configuration.

However, in our Amazon projects we see that most applications aren't using S3 yet, and this motivated me to write a few posts showing the simplest possible S3 uploads in several languages, frameworks and products. This is the first in the series: here we'll show a regular file upload and its S3 equivalent. For the TL;DR version, simply clone the project from GitHub and try it yourself:

```shell
git clone https://github.com/RivendelTecnologia/UploadS3Java.git
```

In the WebContent folder there are two simple HTML files, each containing a form with a single element: a file upload field. The disco.html form posts to the /UploadDisco servlet, which handles the input stream the old-fashioned way (with commons-fileupload) and saves the file to disk, as shown below.

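```java
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.util.List;

import javax.servlet.ServletException;
import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;

import org.apache.commons.fileupload.FileItem;
import org.apache.commons.fileupload.FileUploadException;
import org.apache.commons.fileupload.disk.DiskFileItemFactory;
import org.apache.commons.fileupload.servlet.ServletFileUpload;
import org.apache.commons.io.FilenameUtils; // commons-io is a commons-fileupload dependency

// doPost of the /UploadDisco servlet (inside a class extending HttpServlet)
protected void doPost(HttpServletRequest request, HttpServletResponse response)
        throws ServletException, IOException {
    try {
        List<FileItem> items = new ServletFileUpload(new DiskFileItemFactory()).parseRequest(request);
        for (FileItem item : items) {
            if (item.isFormField()) {
                continue; // nothing to do: the form only has the file upload field
            }
            // Process the form file field (input type="file").
            // Some browsers send the full client path, so keep only the file name.
            String nomeOriginal = FilenameUtils.getName(item.getName());
            // Relative path: the file lands in the server's working directory
            File arquivo = new File(nomeOriginal + System.currentTimeMillis());
            // try-with-resources closes both streams, even if the copy fails
            try (InputStream conteudoArquivo = item.getInputStream();
                 OutputStream fos = new FileOutputStream(arquivo)) {
                byte[] bytes = new byte[1024];
                int read;
                while ((read = conteudoArquivo.read(bytes)) != -1) {
                    fos.write(bytes, 0, read);
                }
            }
            System.out.println("Done! File: " + arquivo.getAbsolutePath());
            response.getWriter().println("File written: " + arquivo.getAbsolutePath());
        }
    } catch (FileUploadException e) {
        throw new ServletException("Cannot parse multipart request.", e);
    }
}
```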
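The manual buffer loop in the disk servlet can also be written with `java.nio.file.Files.copy`, which reads an `InputStream` to the end and writes it to a new file. Below is a minimal, JDK-only sketch assuming Java 8+; the class `DiskUploadSketch` and its `saveUpload` method are hypothetical names, and the naming scheme (client file name followed by the timestamp) simply mirrors the servlet.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class DiskUploadSketch {

    // Hypothetical helper: saves an uploaded stream into dir and returns the written path
    static Path saveUpload(InputStream content, String clientName, Path dir) {
        // Keep only the last segment, in case the browser sent a full client-side path
        int cut = Math.max(clientName.lastIndexOf('/'), clientName.lastIndexOf('\\'));
        String baseName = clientName.substring(cut + 1);
        // Same naming scheme as the servlet: file name followed by the current time in ms
        Path target = dir.resolve(baseName + System.currentTimeMillis());
        // try-with-resources closes the upload stream even if the copy fails
        try (InputStream in = content) {
            Files.copy(in, target); // throws if target already exists
            return target;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        byte[] data = "hello upload".getBytes(StandardCharsets.UTF_8);
        Path tmp = Paths.get(System.getProperty("java.io.tmpdir"));
        Path written = saveUpload(new ByteArrayInputStream(data), "C:\\Users\\me\\notes.txt", tmp);
        System.out.println("File written: " + written.toAbsolutePath());
    }
}
```

Without `StandardCopyOption.REPLACE_EXISTING`, `Files.copy` refuses to overwrite an existing file, so a name collision surfaces as an exception instead of silently replacing an earlier upload.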

To use S3, we need to download the AWS SDK for Java and add it to our dependencies, or use Maven instead. The S3 features also require some Apache Commons libraries.

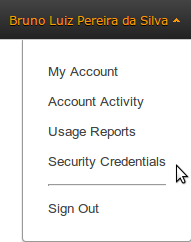

Besides having the Amazon SDK on the classpath, we need to add a file named AwsCredentials.properties, at the root of the classpath, containing your accessKey and secretKey. Both can be found under the Security Credentials option in the AWS console.
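`ClasspathPropertiesFileCredentialsProvider` reads this file from the root of the classpath (for a web app, typically `WEB-INF/classes`), expecting one `accessKey=...` line and one `secretKey=...` line. The sketch below, using only the JDK and a hypothetical `CredentialsFileCheck` class, checks that such a file is present and has both keys before the SDK tries to use it.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.util.Properties;

public class CredentialsFileCheck {

    // Returns true if the stream holds non-blank accessKey and secretKey entries
    static boolean hasAwsKeys(InputStream in) {
        Properties props = new Properties();
        try (InputStream stream = in) {
            props.load(stream);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return notBlank(props.getProperty("accessKey")) && notBlank(props.getProperty("secretKey"));
    }

    private static boolean notBlank(String s) {
        return s != null && !s.trim().isEmpty();
    }

    public static void main(String[] args) {
        // Leading slash: look the file up at the root of the classpath
        InputStream in = CredentialsFileCheck.class.getResourceAsStream("/AwsCredentials.properties");
        if (in == null) {
            System.out.println("AwsCredentials.properties not found on the classpath");
        } else {
            System.out.println("Keys present: " + hasAwsKeys(in));
        }
    }
}
```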


The last thing we need is an S3 bucket available for the upload. We could also create a new bucket through the API, but the easiest and most common choice is to use an existing one.
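If you do create the bucket yourself, its name must follow S3's naming rules. The JDK-only sketch below checks a simplified subset of those rules (3 to 63 characters; lowercase letters, digits, dots and hyphens; starting and ending with a letter or digit; no adjacent dots; not shaped like an IP address). The class `BucketNameCheck` is hypothetical, and the official naming documentation remains the authority.

```java
import java.util.regex.Pattern;

public class BucketNameCheck {

    // Lowercase letters, digits, dots, hyphens; starts and ends with a letter or digit; 3 to 63 chars
    private static final Pattern NAME = Pattern.compile("^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$");
    // Four dot-separated numbers, e.g. 192.168.0.1
    private static final Pattern IP_LIKE = Pattern.compile("^\\d+\\.\\d+\\.\\d+\\.\\d+$");

    static boolean looksLikeValidBucketName(String name) {
        return NAME.matcher(name).matches()
                && !name.contains("..")
                && !IP_LIKE.matcher(name).matches();
    }

    public static void main(String[] args) {
        for (String candidate : new String[] {"rivendel-upload-s3", "Rivendel_Upload", "ab"}) {
            System.out.println(candidate + " -> " + looksLikeValidBucketName(candidate));
        }
    }
}
```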

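Below is the servlet that receives the file upload and sends it straight to S3, without writing it to a file of our own (commons-fileupload's DiskFileItemFactory may still buffer uploads larger than 10 KB, its default threshold, in a temporary file).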

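```java
import java.io.IOException;
import java.io.InputStream;
import java.util.List;

import javax.servlet.ServletException;
import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;

import org.apache.commons.fileupload.FileItem;
import org.apache.commons.fileupload.FileUploadException;
import org.apache.commons.fileupload.disk.DiskFileItemFactory;
import org.apache.commons.fileupload.servlet.ServletFileUpload;
import org.apache.commons.io.FilenameUtils;

import com.amazonaws.auth.ClasspathPropertiesFileCredentialsProvider;
import com.amazonaws.regions.Region;
import com.amazonaws.regions.Regions;
import com.amazonaws.services.s3.AmazonS3;
import com.amazonaws.services.s3.AmazonS3Client;
import com.amazonaws.services.s3.model.CannedAccessControlList;
import com.amazonaws.services.s3.model.ObjectMetadata;
import com.amazonaws.services.s3.model.PutObjectRequest;

protected void doPost(HttpServletRequest request, HttpServletResponse response)
        throws ServletException, IOException {
    // Credentials come from AwsCredentials.properties at the root of the classpath
    AmazonS3 s3 = new AmazonS3Client(new ClasspathPropertiesFileCredentialsProvider());
    s3.setRegion(Region.getRegion(Regions.US_WEST_2));
    String nomeBucket = "rivendel-upload-s3";
    try {
        List<FileItem> items = new ServletFileUpload(new DiskFileItemFactory()).parseRequest(request);
        for (FileItem item : items) {
            if (item.isFormField()) {
                continue; // nothing to do: the form only has the file upload field
            }
            // Process the form file field (input type="file"); the file name becomes the S3 key.
            String nomeArquivo = FilenameUtils.getName(item.getName());
            // A known content length lets the SDK stream the body instead of buffering it in memory;
            // the content type is stored with the object and returned on download
            ObjectMetadata metadata = new ObjectMetadata();
            metadata.setContentType(item.getContentType());
            metadata.setContentLength(item.getSize());
            try (InputStream conteudoArquivo = item.getInputStream()) {
                PutObjectRequest por = new PutObjectRequest(nomeBucket, nomeArquivo, conteudoArquivo, metadata)
                        .withCannedAcl(CannedAccessControlList.PublicRead);
                s3.putObject(por);
            }
            response.getWriter().println("File written: " + nomeArquivo);
        }
    } catch (FileUploadException e) {
        throw new ServletException("Cannot parse multipart request.", e);
    }
}
```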
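In the S3 servlet the object key is just the uploaded file name, so a second upload with the same name replaces the first object in the bucket (unless versioning is enabled on it), whereas the disk version appends a timestamp. A minimal sketch of one way to keep keys distinct, assuming a timestamp prefix is acceptable; `ObjectKeys.uniqueKey` is a hypothetical helper whose result would be passed as the key to `PutObjectRequest`.

```java
public class ObjectKeys {

    // Hypothetical helper: strips any client-side directory and prefixes a timestamp,
    // so two uploads of "photo.jpg" get different keys in the bucket
    static String uniqueKey(String clientName, long timestampMillis) {
        int cut = Math.max(clientName.lastIndexOf('/'), clientName.lastIndexOf('\\'));
        String baseName = clientName.substring(cut + 1);
        return timestampMillis + "-" + baseName;
    }

    public static void main(String[] args) {
        System.out.println(uniqueKey("C:\\Users\\me\\photo.jpg", System.currentTimeMillis()));
    }
}
```

Prefixing (rather than appending, as the disk servlet does) keeps the file extension at the end of the key, which helps when the object is later downloaded by name.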

In this upload, we send the file to a bucket called rivendel-upload-s3 and set its access policy (a canned ACL) to Public Read. This way, anyone can download the file from S3 without authentication. With the region name and the bucket name, you can build the file's access URL in this format: https://s3-region-name.amazonaws.com/bucket-name/file-name.
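Building that URL by plain concatenation breaks for file names containing spaces or other reserved characters. A JDK-only sketch, following the URL format above and using a hypothetical `S3Urls.publicUrl` helper, percent-encodes each segment of the key while keeping `/` separators. The region string (for example `us-west-2`, matching `Regions.US_WEST_2` in the servlet) is an input assumption.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

public class S3Urls {

    // Builds https://s3-<region>.amazonaws.com/<bucket>/<key>, percent-encoding each key segment
    static String publicUrl(String region, String bucket, String key) {
        String[] segments = key.split("/", -1);
        StringBuilder encoded = new StringBuilder();
        for (int i = 0; i < segments.length; i++) {
            if (i > 0) {
                encoded.append('/');
            }
            encoded.append(encodeSegment(segments[i]));
        }
        return "https://s3-" + region + ".amazonaws.com/" + bucket + "/" + encoded;
    }

    private static String encodeSegment(String segment) {
        try {
            // URLEncoder targets form data: a literal '+' becomes %2B, and the '+' it emits
            // for spaces is turned into %20, which is what a URL path expects
            return URLEncoder.encode(segment, "UTF-8").replace("+", "%20");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException("UTF-8 is always supported", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(publicUrl("us-west-2", "rivendel-upload-s3", "my photo.jpg"));
    }
}
```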

Soon we'll publish similar posts with examples in other languages. I hope this proves useful to many people :). See you next time!

Did you like this content? Any questions? Get in touch with our Mandic Cloud specialists; we'll be happy to help.